Overblown data center energy concerns

Computing needs a lot of electricity, but those needs are flexible and often fleeting

In the US, electricity demand growth has been a hot topic of conversation. Electricity demand is expected to rise 1.5% annually through 2026 and beyond, thanks to a manufacturing boom and the electrification of transportation and buildings. About a third of that growth is expected to come from data centers as crypto mining and artificial intelligence continue to accelerate. I’m seeing a storm of confusion about how data centers and software companies operate, as some utilities use the data center boogeyman to push through new gas plants or to blame their inability to keep up with growth on unreasonable demands for clean energy. Let’s talk about how data centers differ from other large electricity users and how that should shape the conversation around load growth.

The grid and the Internet are engineering marvels

To understand where we’re going, it’s helpful to know where we’ve been. The electric grid and the Internet are backed by some of the most impressive engineering in human history and come from two different eras. Their history informs the mindset of the people who work on them.

The electric grid was born out of the chaos following Thomas Edison’s Pearl Street Station, which assumed power would be generated at a central source and distributed through wires connecting every electricity consumer. When demand outgrew the available generation, the answer was to build more generation and more lines. Electricity provided such a huge productivity benefit to society that a reliable supply became table stakes for any business. In the ensuing decades, people like Samuel Insull realized there was a great benefit to consolidating generation and transmission. However, the antitrust political climate of the time meant partnering with government early on. This regulated approach locked in the paradigm of ever-larger generation sources pushing ever-higher voltages over long distances to light every home in the US. Because redundancy is expensive and often difficult to build under this model, engineers have spent the past century doing an exceptional job of building highly reliable infrastructure that withstands severe weather and can reroute power in creative ways when a failure does occur, avoiding large-scale blackouts. Engineering the electric grid is an exercise in precision where a misstep costs millions of dollars (or more) and plans are measured in years.

The Internet grew up quite differently. Most early Internet applications revolved around communication and didn’t work as well as a wired telephone or standard mail. Protocols were designed to run over many different types of wire to maximize reach on the back of existing infrastructure, leading to decentralized ownership of the Internet itself. This fateful decision resulted in a nearly completely deregulated approach that brought new challenges, such as denial-of-service attacks, in which an attacker intentionally overwhelms a system with junk requests to knock it offline. These attacks created a need to build in resilience by segmenting the network into smaller pieces and building out content delivery networks (CDNs) that replicate a service across many locations, making it harder to take down. As data sets continued to grow, the Internet giants of the 2000s deployed distributed software systems like Apache Hadoop to work around the unreliability of the underlying hardware, sparking the rise of applications designed from the start to run on many servers at once.

In 2006, Amazon pioneered the on-demand rental of computing resources that we now call cloud computing. Cloud providers then became focal points for electricity optimization as they moved from renting out poorly utilized bare-metal servers to shared infrastructure densely packed with many customers’ software. From there, they optimized the servers underneath, supplementing traditional x86 architectures, which focus on raw computing speed, with low-power ARM-based designs like those found in cell phones to cut electricity consumption with little sacrifice in performance. These optimizations are why the data center explosion since 2006 hasn’t caused an appreciable increase in electricity consumption in the US. Artificial intelligence and cryptocurrency could change this, but that is not a certainty.

Tech and utilities need each other

I believe this impedance mismatch between grid engineering and software engineering is responsible for the growing narrative that rising demand from data centers requires keeping fossil fuel plants online. On one side, grid engineers rightly want to avoid being caught without enough generation to meet the load. On the other, software engineers know they can often move a load from one building or region to another for a few hours or days with no adverse impact on their clients. What’s missing is the collaboration needed to understand each other’s plight.

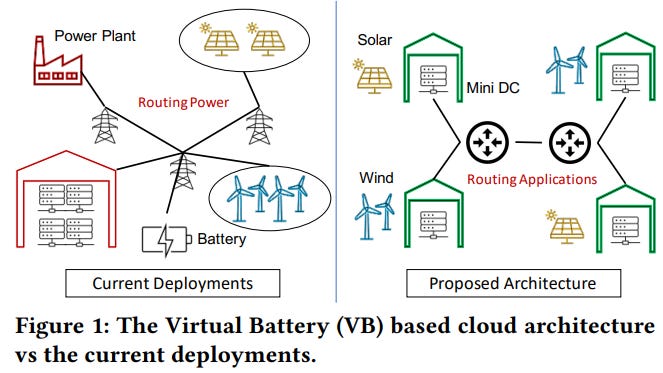

Microsoft, for example, published a paper in 2021 about how it plans to redesign its data centers around a concept called a Virtual Battery, in which load is shifted to match the intermittency of renewable resources. Utilities could make this sort of design a condition of supplying power to Microsoft and greatly simplify their transmission infrastructure needs.

More recently, Google published a blog post in 2023 about its work as a demand response partner, pushing workloads away from areas with temporarily stressed grids. Cloudflare already sells access to compute resources that it guarantees are 100% powered by renewable energy, in exchange for permission to float the workload to any compliant data center. There’s clearly an appetite on the data center side to make this happen.
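To make the idea of floating a workload concrete, here is a minimal Python sketch of how a scheduler might pick a region for a deferrable job. It is not how Google or Cloudflare actually do it: the `Region` fields, the 0-to-1 `grid_stress` signal, the `renewable_matched` flag, the 0.8 stress threshold, and the region numbers are all hypothetical assumptions chosen for illustration.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class Region:
    name: str
    grid_stress: float       # hypothetical signal: 0.0 = relaxed grid, 1.0 = emergency
    renewable_matched: bool  # hypothetical flag: supply is 100% renewable-matched
    free_capacity_mw: float  # spare data center capacity in this region (MW)


def place_job(regions: Sequence[Region], job_mw: float,
              require_renewable: bool = True,
              stress_limit: float = 0.8) -> Optional[str]:
    """Pick the least-stressed eligible region for a deferrable job, or None to wait."""
    eligible = [
        r for r in regions
        if r.free_capacity_mw >= job_mw
        and r.grid_stress < stress_limit
        and (r.renewable_matched or not require_renewable)
    ]
    if not eligible:
        return None  # no suitable region right now: defer the job and retry later
    return min(eligible, key=lambda r: r.grid_stress).name


regions = [  # made-up numbers for illustration
    Region("georgia", grid_stress=0.95, renewable_matched=False, free_capacity_mw=50.0),
    Region("oregon", grid_stress=0.20, renewable_matched=True, free_capacity_mw=20.0),
    Region("iowa", grid_stress=0.40, renewable_matched=True, free_capacity_mw=80.0),
]
print(place_job(regions, job_mw=10.0))   # oregon
print(place_job(regions, job_mw=30.0))   # iowa
print(place_job(regions, job_mw=100.0))  # None
```

The stressed grid never receives the job, and returning `None` means the work simply waits. That waiting is exactly the kind of flexibility a grid operator could plan around instead of building for the worst case.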

The collaboration model is lacking, though. Microsoft excoriated Georgia Power in public comments on its proposed gas plant expansion to meet new workloads. Microsoft even went so far as to criticize Georgia Power for planning capacity for workloads that have only been proposed and not yet committed to the region, showing a clear lack of understanding of the culture and timelines involved in building out the electric grid. We don’t know whether there are behind-the-scenes conversations, but this sort of tone suggests nothing productive is happening between the two out of public view.

Data centers do not have to be built in any particular state. Operators often choose a location for its tax incentives and for geographic spread for disaster recovery. States in the Southeast US, where vertically integrated monopoly utilities are common, are a clear target for new data centers, and manufacturing in the region has been booming. Data centers make an excellent industrial complement to manufacturing plants: generation must be built for both, but data centers are a more flexible load during times of stress. They can shave peaks and fill the valleys left by, for instance, car manufacturers, which lose significant revenue when they shut down and must be made whole.
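Filling valleys is essentially a water-filling problem. The Python sketch below works under simplifying assumptions: hourly steps, a made-up base load profile for a manufacturing-heavy area, and a data center whose entire daily energy budget is deferrable up to a per-hour cap. It binary-searches for a "water level" and tops each hour up to it, so the flexible load lands where the base load is lowest. `fill_valleys` is a hypothetical helper, not a utility or vendor tool.

```python
def fill_valleys(base_mw: list[float], energy_mwh: float, max_mw: float) -> list[float]:
    """Schedule a flexible daily energy budget into the lowest-load hours.

    Each hour's draw is min(max_mw, max(0, level - base)). We binary-search the
    'water level' so the draws add up to energy_mwh (1-hour steps, so MW == MWh).
    """
    if energy_mwh > max_mw * len(base_mw):
        raise ValueError("energy budget exceeds what the per-hour cap allows")

    def draws(level: float) -> list[float]:
        return [min(max_mw, max(0.0, level - b)) for b in base_mw]

    lo, hi = min(base_mw), max(base_mw) + max_mw  # total draw is 0 at lo, maximal at hi
    for _ in range(100):
        mid = (lo + hi) / 2
        if sum(draws(mid)) < energy_mwh:
            lo = mid
        else:
            hi = mid
    return draws(hi)


base = [100.0, 80.0, 60.0, 90.0]  # made-up hourly base load (MW)
schedule = fill_valleys(base, energy_mwh=60.0, max_mw=40.0)
combined = [b + d for b, d in zip(base, schedule)]
print([round(d, 1) for d in schedule])  # [0.0, 16.7, 36.7, 6.7]
print(round(max(combined), 1))          # 100.0
```

In this toy case, spreading the same 60 MWh evenly (15 MW every hour) would push the combined peak to 115 MW, while the valley-filling schedule draws nothing during the peak hour and leaves the peak at the original 100 MW. Real operators also face must-run load and latency limits that this sketch ignores.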

The central question for me is whether tech hubris and utility conservatism can meet in the middle to do what is best for consumers. It would be a huge loss for other ratepayers if these cash-rich tech companies decided to build their own generation and pursue microgrids. Their power purchase agreements have been invaluable in bringing solar and wind down the cost curve in the US. Building microgrids at their data centers would also be a loss for the tech companies themselves, which today buy electricity for less than it would cost to build and maintain a microgrid, especially since they are not culturally prepared for the regulatory scrutiny that comes with generating their own power. The utilities seem to lose in the short term because they would not get to build capital-intensive gas plants, but there are surely creative solutions, such as having data center operators pay for transmission projects up front in exchange for a lower rate in the medium term. At the risk of quoting Gellert Grindelwald, I am hopeful that we can bring these two marvels of engineering together for the greater good.